En este notebook, veremos un ejemplo (muy simple!) aplicado de la metodología de clasificación vista en el cursos. Para ello, utilizaremos el (muy popular) conjunto de datos Titanic. La tarea de aprendizaje será predecir, dado un pasajero del Titanic, si sobrevivirá.

import numpy as np

import pandas as pd

import sklearn

import sklearn.preprocessing

import sklearn.feature_selection

import sklearn.model_selection

import graphviz

import scipy.stats

Ejemplo: Titanic Dataset: listado de pasajeros del Titanica, indicando si sobrevivieron o no. Más detalles aquí.

titanic=pd.read_csv('https://raw.githubusercontent.com/pln-fing-udelar/curso_aa/master/data/titanic.csv')

titanic

| row.names | pclass | survived | name | age | embarked | home.dest | room | ticket | boat | sex | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1st | 1 | Allen, Miss Elisabeth Walton | 29.0000 | Southampton | St Louis, MO | B-5 | 24160 L221 | 2 | female |

| 1 | 2 | 1st | 0 | Allison, Miss Helen Loraine | 2.0000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | female |

| 2 | 3 | 1st | 0 | Allison, Mr Hudson Joshua Creighton | 30.0000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | (135) | male |

| 3 | 4 | 1st | 0 | Allison, Mrs Hudson J.C. (Bessie Waldo Daniels) | 25.0000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | female |

| 4 | 5 | 1st | 1 | Allison, Master Hudson Trevor | 0.9167 | Southampton | Montreal, PQ / Chesterville, ON | C22 | NaN | 11 | male |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1308 | 1309 | 3rd | 0 | Zakarian, Mr Artun | NaN | NaN | NaN | NaN | NaN | NaN | male |

| 1309 | 1310 | 3rd | 0 | Zakarian, Mr Maprieder | NaN | NaN | NaN | NaN | NaN | NaN | male |

| 1310 | 1311 | 3rd | 0 | Zenn, Mr Philip | NaN | NaN | NaN | NaN | NaN | NaN | male |

| 1311 | 1312 | 3rd | 0 | Zievens, Rene | NaN | NaN | NaN | NaN | NaN | NaN | female |

| 1312 | 1313 | 3rd | 0 | Zimmerman, Leo | NaN | NaN | NaN | NaN | NaN | NaN | male |

1313 rows × 11 columns

Fase 1: Preprocesamiento¶

Atributos faltantes¶

Vamos a sustituir los atributos faltantes por el promedio de los valores

# Contamos cuántos NaN son

print("Cantidad de instancias sin valor: {0}".format(titanic['age'].isna().sum()))

# Vemos el promedio de edad de los sobrevivientes, según la clase

mean_age=titanic.mean()['age']

display(mean_age)

# Actualizamos con la mean_age de cada grupo

titanic.loc[titanic['age'].isna(),'age']=mean_age

Cantidad de instancias sin valor: 680

31.19418104265403

titanic

| row.names | pclass | survived | name | age | embarked | home.dest | room | ticket | boat | sex | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1st | 1 | Allen, Miss Elisabeth Walton | 29.000000 | Southampton | St Louis, MO | B-5 | 24160 L221 | 2 | female |

| 1 | 2 | 1st | 0 | Allison, Miss Helen Loraine | 2.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | female |

| 2 | 3 | 1st | 0 | Allison, Mr Hudson Joshua Creighton | 30.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | (135) | male |

| 3 | 4 | 1st | 0 | Allison, Mrs Hudson J.C. (Bessie Waldo Daniels) | 25.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | female |

| 4 | 5 | 1st | 1 | Allison, Master Hudson Trevor | 0.916700 | Southampton | Montreal, PQ / Chesterville, ON | C22 | NaN | 11 | male |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1308 | 1309 | 3rd | 0 | Zakarian, Mr Artun | 31.194181 | NaN | NaN | NaN | NaN | NaN | male |

| 1309 | 1310 | 3rd | 0 | Zakarian, Mr Maprieder | 31.194181 | NaN | NaN | NaN | NaN | NaN | male |

| 1310 | 1311 | 3rd | 0 | Zenn, Mr Philip | 31.194181 | NaN | NaN | NaN | NaN | NaN | male |

| 1311 | 1312 | 3rd | 0 | Zievens, Rene | 31.194181 | NaN | NaN | NaN | NaN | NaN | female |

| 1312 | 1313 | 3rd | 0 | Zimmerman, Leo | 31.194181 | NaN | NaN | NaN | NaN | NaN | male |

1313 rows × 11 columns

Ejemplo Titanic (cont): convertimos el atributo sex en binario (toma valores en {0,1})

# Creamos un labelEncoder utilizando scikit-learn

le=sklearn.preprocessing.LabelEncoder()

# Obtenemos las clases a partir de los valores del conjunto de entrenamiento

le.fit(titanic['sex'])

# Mostramos las clases obtenidas

le.classes_

# Ajustamos el campo sex, transformándolo

titanic.loc[:,'sex'] = le.transform(titanic.loc[:,'sex'])

titanic

| row.names | pclass | survived | name | age | embarked | home.dest | room | ticket | boat | sex | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1st | 1 | Allen, Miss Elisabeth Walton | 29.000000 | Southampton | St Louis, MO | B-5 | 24160 L221 | 2 | 0 |

| 1 | 2 | 1st | 0 | Allison, Miss Helen Loraine | 2.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | 0 |

| 2 | 3 | 1st | 0 | Allison, Mr Hudson Joshua Creighton | 30.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | (135) | 1 |

| 3 | 4 | 1st | 0 | Allison, Mrs Hudson J.C. (Bessie Waldo Daniels) | 25.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | 0 |

| 4 | 5 | 1st | 1 | Allison, Master Hudson Trevor | 0.916700 | Southampton | Montreal, PQ / Chesterville, ON | C22 | NaN | 11 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1308 | 1309 | 3rd | 0 | Zakarian, Mr Artun | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 |

| 1309 | 1310 | 3rd | 0 | Zakarian, Mr Maprieder | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 |

| 1310 | 1311 | 3rd | 0 | Zenn, Mr Philip | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 |

| 1311 | 1312 | 3rd | 0 | Zievens, Rene | 31.194181 | NaN | NaN | NaN | NaN | NaN | 0 |

| 1312 | 1313 | 3rd | 0 | Zimmerman, Leo | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 |

1313 rows × 11 columns

Ejemplo titanic (cont): transformamos el campo pclass utilizando one-hot-encoding:

# Utilizamos scikit-learn para crear un one-hot-encoder

ohe=sklearn.preprocessing.OneHotEncoder(sparse=False)

# Obtenemos las categorías a partir de los datos de entrenamiento

ohe.fit(titanic['pclass'].to_numpy().reshape(-1,1))

display(ohe.categories_)

# Obtenemos los nuevos valores a partir del valor original

new=ohe.transform(titanic['pclass'].to_numpy().reshape(-1,1))

# Creamos nuevos atributos

titanic['class_1st']=new[:,0]

titanic['class_2nd']=new[:,1]

titanic['class_3rd']=new[:,2]

[array(['1st', '2nd', '3rd'], dtype=object)]

titanic

| row.names | pclass | survived | name | age | embarked | home.dest | room | ticket | boat | sex | class_1st | class_2nd | class_3rd | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1st | 1 | Allen, Miss Elisabeth Walton | 29.000000 | Southampton | St Louis, MO | B-5 | 24160 L221 | 2 | 0 | 1.0 | 0.0 | 0.0 |

| 1 | 2 | 1st | 0 | Allison, Miss Helen Loraine | 2.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | 0 | 1.0 | 0.0 | 0.0 |

| 2 | 3 | 1st | 0 | Allison, Mr Hudson Joshua Creighton | 30.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | (135) | 1 | 1.0 | 0.0 | 0.0 |

| 3 | 4 | 1st | 0 | Allison, Mrs Hudson J.C. (Bessie Waldo Daniels) | 25.000000 | Southampton | Montreal, PQ / Chesterville, ON | C26 | NaN | NaN | 0 | 1.0 | 0.0 | 0.0 |

| 4 | 5 | 1st | 1 | Allison, Master Hudson Trevor | 0.916700 | Southampton | Montreal, PQ / Chesterville, ON | C22 | NaN | 11 | 1 | 1.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 1308 | 1309 | 3rd | 0 | Zakarian, Mr Artun | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 | 0.0 | 0.0 | 1.0 |

| 1309 | 1310 | 3rd | 0 | Zakarian, Mr Maprieder | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 | 0.0 | 0.0 | 1.0 |

| 1310 | 1311 | 3rd | 0 | Zenn, Mr Philip | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 | 0.0 | 0.0 | 1.0 |

| 1311 | 1312 | 3rd | 0 | Zievens, Rene | 31.194181 | NaN | NaN | NaN | NaN | NaN | 0 | 0.0 | 0.0 | 1.0 |

| 1312 | 1313 | 3rd | 0 | Zimmerman, Leo | 31.194181 | NaN | NaN | NaN | NaN | NaN | 1 | 0.0 | 0.0 | 1.0 |

1313 rows × 14 columns

# Eliminamos algunos atributos que no parecen relevantes.

# Esto podríamos afinarlo usando Feature Selection

titanic.drop(['row.names','pclass', 'name', 'embarked', 'home.dest', 'room', 'ticket', 'boat'], axis=1, inplace=True)

titanic

| survived | age | sex | class_1st | class_2nd | class_3rd | |

|---|---|---|---|---|---|---|

| 0 | 1 | 29.000000 | 0 | 1.0 | 0.0 | 0.0 |

| 1 | 0 | 2.000000 | 0 | 1.0 | 0.0 | 0.0 |

| 2 | 0 | 30.000000 | 1 | 1.0 | 0.0 | 0.0 |

| 3 | 0 | 25.000000 | 0 | 1.0 | 0.0 | 0.0 |

| 4 | 1 | 0.916700 | 1 | 1.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... |

| 1308 | 0 | 31.194181 | 1 | 0.0 | 0.0 | 1.0 |

| 1309 | 0 | 31.194181 | 1 | 0.0 | 0.0 | 1.0 |

| 1310 | 0 | 31.194181 | 1 | 0.0 | 0.0 | 1.0 |

| 1311 | 0 | 31.194181 | 0 | 0.0 | 0.0 | 1.0 |

| 1312 | 0 | 31.194181 | 1 | 0.0 | 0.0 | 1.0 |

1313 rows × 6 columns

# Primero separamos las X de las y

titanic_X = titanic[['age','sex', 'class_1st', 'class_2nd', 'class_3rd']]

titanic_y = titanic[['survived']]

# Construimos los corpus de entrenamiento y test

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(titanic_X, titanic_y, test_size=0.25, random_state=33)

display(X_train.shape)

display(X_test.shape)

(984, 5)

(329, 5)

Fase 3: Entrenamiento¶

# Vamos a entrenar un árbol de decisión sobre los datos de entrenamiento

from sklearn import tree

clf = tree.DecisionTreeClassifier(criterion='entropy', max_depth=3 , min_samples_leaf=5)

clf = clf.fit(X_train,y_train)

src = graphviz.Source(tree.export_graphviz(clf, feature_names=['age','sex','1st_class','2nd_class','3rd_class']))

src

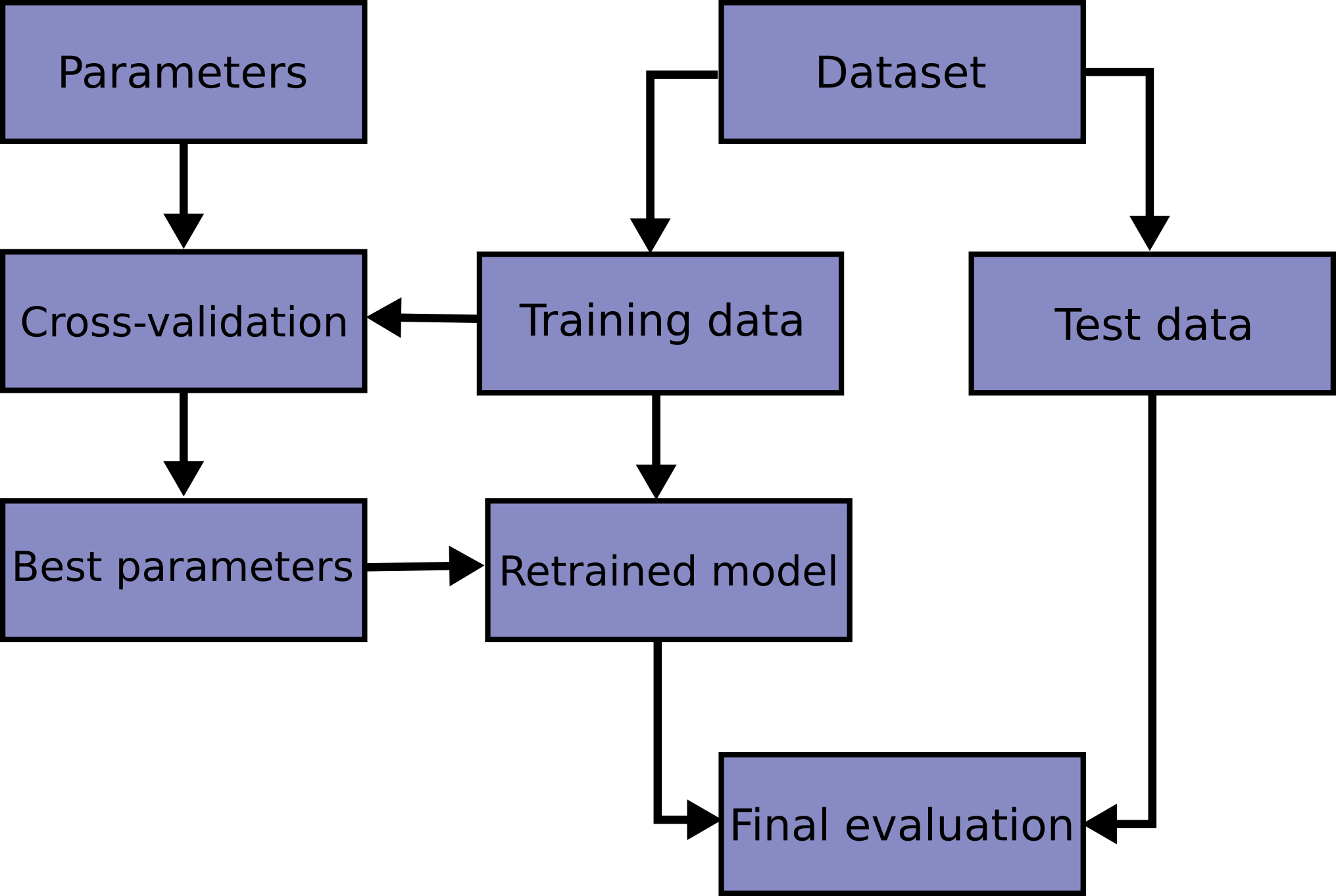

Validación cruzada y selección de modelos¶

# Hacemos cross validation para encontrar la mejor profundidad para el árbol

for md in range(10):

clf = tree.DecisionTreeClassifier(criterion='entropy', max_depth=md+1 , min_samples_leaf=5)

kf=sklearn.model_selection.KFold(n_splits=5)

scores=np.zeros(5)

score_index=0

for train_index, test_index in kf.split(X_train):

X_train_cv, X_test_cv= X_train.iloc[train_index], X_train.iloc[test_index]

y_train_cv, y_test_cv= y_train.iloc[train_index], y_train.iloc[test_index]

clf = clf.fit(X_train_cv,y_train_cv)

y_pred=clf.predict(X_test_cv)

scores[score_index]=metrics.accuracy_score(y_test_cv.astype(int), y_pred.astype(int))

score_index += 1

print ("Profundidad {0:d}, Accuracy media: {1:.3f} (+/-{1:.3f})".format(md+1, np.mean(scores), scipy.stats.sem(scores)))

Profundidad 1, Accuracy media: 0.786 (+/-0.786) Profundidad 2, Accuracy media: 0.833 (+/-0.833) Profundidad 3, Accuracy media: 0.827 (+/-0.827) Profundidad 4, Accuracy media: 0.825 (+/-0.825) Profundidad 5, Accuracy media: 0.823 (+/-0.823) Profundidad 6, Accuracy media: 0.825 (+/-0.825) Profundidad 7, Accuracy media: 0.823 (+/-0.823) Profundidad 8, Accuracy media: 0.824 (+/-0.824) Profundidad 9, Accuracy media: 0.824 (+/-0.824) Profundidad 10, Accuracy media: 0.826 (+/-0.826)

from sklearn import metrics

def measure_performance(X,y,clf, show_accuracy=True, show_classification_report=True, show_confusion_matrix=True):

y_pred=clf.predict(X)

if show_accuracy:

print ("Accuracy:{0:.3f}".format(metrics.accuracy_score(y,y_pred)),"\n")

if show_classification_report:

print("Classification report")

print(metrics.classification_report(y,y_pred),"\n")

if show_confusion_matrix:

print ("Confusion matrix")

print (metrics.confusion_matrix(y,y_pred),"\n")

# Construimos un clasificador con el mejor parámetro, y entrenamos sobre todo el conjunto de entrenamiento

clf_dt=tree.DecisionTreeClassifier(criterion='entropy', max_depth=2 ,min_samples_leaf=5)

clf_dt.fit(X_train,y_train)

measure_performance(X_test,y_test,clf_dt)

Accuracy:0.787

Classification report

precision recall f1-score support

0 0.77 0.94 0.84 202

1 0.85 0.54 0.66 127

accuracy 0.79 329

macro avg 0.81 0.74 0.75 329

weighted avg 0.80 0.79 0.77 329

Confusion matrix

[[190 12]

[ 58 69]]

Esto es así:

- 190 eran No sobrevive, y los clasificó bien. A 12 más los clasificó como que sobrevieron, pero no.

69 Sobrevivieron y los clasificó bien. a 58 más los clasificó como que No, y sobrevivieron.

Precisión para la clase 0: TP/(TP+FP) = 190 / (190 + 58) = 0.77 (Ojo que aquí "positivo" es que no sobrevivió)

- Recall para la clase 0: TP/(TP+FN) = 190/(190+12) = 0.94

- Precisión para la clase 1: 69/(58+69) = 0.54

- Recall para la clase 1: 69/(69+12) = 0.85

Predicción¶

titanic_X = titanic[['age','sex', 'class_1st', 'class_2nd', 'class_3rd']]

Rose = np.array([17,0,1,0,0]).reshape(1, -1)

y_pred=clf.predict(Rose)

display(y_pred)

Jack = np.array([23,1,0,0,1]).reshape(1, -1)

y_pred=clf.predict(Jack)

display(y_pred)

array([1], dtype=int64)

array([0], dtype=int64)